Reinforcement Learning: Supercharging AI Agent Training for Business Automation

Introduction: The Rise of Intelligent AI Agents

Did you know that AI agents can now automate tasks previously thought impossible? The rise of intelligent AI agents is transforming business automation, but traditional training methods are falling short.

Businesses are under increasing pressure to improve efficiency and reduce costs. As a result, they are actively seeking automation solutions to streamline operations. AI agents are becoming essential for handling complex tasks, from customer service to data analysis.

- Diverse Applications: AI agents are now capable of managing and optimizing cloud spending. They can also personalize marketing efforts by tailoring recommendations to individual users based on their interactions.

- Adaptability: The demand for adaptable and intelligent AI agents is driving the adoption of advanced training techniques that allow them to quickly adjust to changing environments.

Traditional AI training methods, such as supervised and unsupervised learning, have several limitations that hinder their effectiveness in real-world applications.

- Supervised Learning: This method requires extensive labeled datasets, which can be expensive and time-consuming to create.

- Unsupervised Learning: Because it only uncovers patterns in unlabeled data, it offers no direct way to optimize for a specific goal or desired outcome.

- Adaptability Issues: Traditional methods often struggle to adapt to dynamic and unpredictable real-world environments.

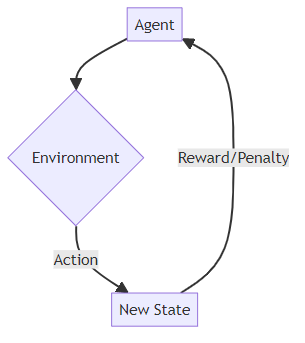

Reinforcement Learning (RL) offers a powerful approach to training AI agents through trial and error. RL enables agents to learn optimal strategies by interacting with an environment and receiving rewards or penalties. According to NVIDIA, RL is based on the Markov Decision Process (MDP), where agents choose actions based on the current state and the environment responds with a new state and a reward.

- Complex Environments: This method is particularly well-suited for complex, dynamic, and uncertain environments where traditional methods fall short.

- Trial and Error: According to AWS, RL mimics the trial-and-error learning process that humans use to achieve their goals.

Now that we've introduced the rise of intelligent AI agents and the role of Reinforcement Learning, let's delve into the fundamental concepts behind RL.

Understanding the Fundamentals of Reinforcement Learning

Did you know that AI agents can learn to play complex games like chess entirely through self-play, without studying a single human game? Reinforcement Learning (RL) makes this possible by enabling agents to learn through trial and error, much like humans do.

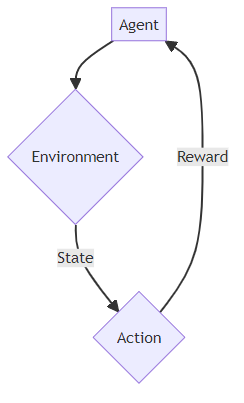

At the heart of RL are five key elements that define how an agent interacts with its world:

- Agent: This is the AI entity that makes decisions. Think of it as the player in a game, or the controller of an automated system.

- Environment: The world in which the agent operates. This could be a game, a simulation, or a real-world setting like a warehouse or a financial market.

- State: The current situation or configuration of the environment. For example, in a game, the state might include the positions of all the pieces on the board.

- Action: A move the agent makes that can change the state of the environment. In a robotics application, this could be a movement command.

- Reward: A signal (positive or negative) that indicates the desirability of an action. Rewards guide the agent towards optimal behavior.
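To see how these five elements fit together, here is a minimal sketch in Python. It assumes a hypothetical `CorridorEnv` in which the agent moves left or right along a short corridor and is rewarded for reaching the far end. The `reset`/`step` method names loosely follow the convention popularized by OpenAI Gym, but this is a self-contained toy, not any library's API.

```python
import random


class CorridorEnv:
    """Hypothetical environment: positions 0..length-1, episode ends at the last position."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        # State: the agent's current position in the corridor.
        self.state = 0
        return self.state

    def step(self, action):
        # Action: -1 moves left, +1 moves right (clamped to the corridor bounds).
        self.state = max(0, min(self.length - 1, self.state + action))
        done = self.state == self.length - 1
        # Reward: +1 for reaching the goal, a small penalty for every other step.
        reward = 1.0 if done else -0.1
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Agent loop: observe the state, choose an action, receive a reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent decides
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    return random.choice([-1, 1])


def always_right(state):
    return 1


print(run_episode(CorridorEnv(), always_right))
print(run_episode(CorridorEnv(), random_policy))
```

The random policy usually wanders and collects step penalties, while `always_right` reaches the goal in four steps; learning a good policy from rewards alone is what RL algorithms automate.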

The Markov Decision Process (MDP) provides a mathematical framework for modeling decision-making in sequential environments. It's the backbone behind RL algorithms, defining the rules of the game:

- It defines the state space, which includes all possible states the environment can be in.

- It defines the action space, which includes all possible actions the agent can take.

- It defines the transition probabilities, which determine how the environment changes when the agent takes an action. When these probabilities are known (or learned), the agent can use them to predict the likely outcomes of its actions and plan ahead; model-free methods skip this step and learn directly from experience.

- Finally, it defines the reward function, which specifies the reward the agent receives for each action taken in each state. This function is vital as it directly guides the agent's learning process by defining what constitutes success or failure.

Understanding MDP is crucial for designing and implementing effective RL algorithms.
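When the transition probabilities and reward function are known, the agent can plan with dynamic programming. The sketch below encodes a hypothetical two-state machine-maintenance MDP as Python dictionaries and runs value iteration. The states, actions, probabilities, and rewards are invented for illustration, and the discount factor `gamma`, which weights future rewards against immediate ones, is an extra assumption not listed above.

```python
# Hypothetical MDP: P[state][action] -> list of (probability, next_state, reward)
P = {
    "ok": {
        "run":    [(0.9, "ok", 10.0), (0.1, "broken", 10.0)],
        "repair": [(1.0, "ok", -2.0)],
    },
    "broken": {
        "run":    [(1.0, "broken", 0.0)],
        "repair": [(1.0, "ok", -5.0)],
    },
}


def value_iteration(P, gamma=0.9, tol=1e-8):
    """Repeatedly apply the Bellman optimality update until values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            # Value of each action: expected immediate reward plus discounted next-state value.
            best = max(
                sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)
                for outcomes in P[s].values()
            )
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # The greedy policy picks the action with the highest expected return in each state.
    policy = {
        s: max(P[s], key=lambda a: sum(p * (r + gamma * V[s2]) for p, s2, r in P[s][a]))
        for s in P
    }
    return V, policy


V, policy = value_iteration(P)
print(policy)  # → {'ok': 'run', 'broken': 'repair'}
```

Planning discovers that repairing a broken machine is worth its cost because it restores future production, even though the repair itself yields a negative reward.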

One of the biggest challenges in RL is balancing exploration and exploitation:

- Exploration: Trying new actions to discover potentially better strategies.

- Exploitation: Using the best strategy found so far to maximize reward based on what the agent already knows.

For example, imagine a retail company using RL to optimize its pricing strategy. The agent must decide whether to exploit its current pricing model (which is generating steady revenue) or explore new pricing strategies to see if it can increase profits further. Balancing these two is essential for maximizing long-term performance.
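A common way to strike this balance is an epsilon-greedy rule: with a small probability `epsilon` the agent explores a random option, and otherwise it exploits the best one found so far. The sketch below applies it to the pricing example by treating each candidate price as an arm of a multi-armed bandit. The price points and the simulated purchase probabilities are hypothetical stand-ins for real sales data.

```python
import random

PRICES = [9.99, 12.99, 14.99]  # hypothetical candidate price points


def simulated_revenue(price, rng):
    """Hypothetical demand model: higher prices convert less often."""
    purchase_prob = {9.99: 0.50, 12.99: 0.48, 14.99: 0.20}[price]
    return price if rng.random() < purchase_prob else 0.0


def epsilon_greedy_pricing(rounds=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    counts = {p: 0 for p in PRICES}
    avg_revenue = {p: 0.0 for p in PRICES}  # running estimate of revenue per offer
    for _ in range(rounds):
        if rng.random() < epsilon:
            price = rng.choice(PRICES)                # explore: try a random price
        else:
            price = max(PRICES, key=avg_revenue.get)  # exploit: best estimate so far
        revenue = simulated_revenue(price, rng)
        counts[price] += 1
        # Incremental mean update of the revenue estimate for this price.
        avg_revenue[price] += (revenue - avg_revenue[price]) / counts[price]
    return avg_revenue, counts


estimates, counts = epsilon_greedy_pricing()
best_price = max(PRICES, key=estimates.get)
print(best_price, counts)
```

Raising `epsilon` spends more rounds testing alternative prices; lowering it commits sooner to the current favorite and risks never discovering a better price.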

Now that we've covered the fundamentals, let's explore the different types of RL algorithms.

Key Reinforcement Learning Algorithms for AI Agents

Did you know that certain Reinforcement Learning (RL) algorithms can learn optimal strategies without ever explicitly being told what those strategies should be? Let's explore some of the key algorithms driving AI agent training.

Q-learning is a model-free RL algorithm that learns a Q-value for every possible state-action combination. In simpler terms, it figures out the best action to take in any given situation by estimating how much cumulative reward each action will lead to. Being "model-free" means it doesn't need a pre-defined model of the environment's dynamics, which makes it flexible.

- The Q-value is the expected cumulative reward of taking a specific action in a specific state and then continuing to act optimally.

- In its basic tabular form, Q-learning stores one value per state-action pair, so it works best in scenarios with a small, discrete set of states and actions.
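For reference, the standard tabular Q-learning update moves the current estimate toward a target built from the observed reward r and the best estimated value of the next state s′. Here α is a learning rate and γ is a discount factor between 0 and 1; neither symbol is named in the text above.

```latex
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
```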

Imagine a robotic arm in a manufacturing plant learning the most efficient way to assemble a product. The agent explores different sequences of movements, and Q-learning helps it determine which movements lead to the highest reward.
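A minimal tabular Q-learning sketch follows. It assumes a hypothetical five-position corridor in place of the robotic-arm task (a real assembly task would need a far richer state description) and applies the standard update Q(s, a) ← Q(s, a) + α[r + γ max Q(s′, ·) − Q(s, a)] with epsilon-greedy exploration. The environment, reward values, and hyperparameters are illustrative choices.

```python
import random
from collections import defaultdict

N_STATES = 5       # hypothetical corridor: positions 0..4, goal at 4
ACTIONS = (-1, 1)  # move left or move right


def step(state, action):
    """Hypothetical dynamics: deterministic moves, +1 at the goal, -0.1 per other step."""
    next_state = max(0, min(N_STATES - 1, state + action))
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else -0.1), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)  # Q[(state, action)], defaults to 0.0
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: Q[(state, a)])
            next_state, reward, done = step(state, action)
            # The goal is terminal, so it contributes no future value.
            best_next = 0.0 if done else max(Q[(next_state, a)] for a in ACTIONS)
            Q[(state, action)] += alpha * (reward + gamma * best_next - Q[(state, action)])
            state = next_state
    return Q


Q = q_learning()
greedy = {s: max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(N_STATES - 1)}
print(greedy)  # in this toy setup, every non-goal position should prefer +1 (move right)
```

The table `Q` grows with every distinct state-action pair, which is exactly why this approach breaks down for large or continuous state spaces.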

What happens when the environment becomes too complex for a simple Q-table? Deep Q-Networks (DQN) come to the rescue by combining Q-learning with deep neural networks.

- DQNs use neural networks to approximate the Q-function, making it possible to handle high-dimensional state spaces.

- To stabilize training, DQN employs techniques like experience replay, where the agent stores and replays past experiences, and target networks, which provide stable targets for learning.

For example, DQN has been successfully used to teach AI agents how to play Atari games, where the agent learns to interpret the game screen and make decisions based on visual input.
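The two stabilization techniques can be sketched without a deep-learning framework. Below, a replay buffer stores transitions and returns random mini-batches, and temporal-difference targets are computed with a separate, periodically synced copy of the Q-function (the target network). To keep the example self-contained, a hypothetical linear Q-function over small feature vectors stands in for the neural network; in practice both networks would be built with a framework such as PyTorch or TensorFlow.

```python
import copy
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity=10_000, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped when full
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random mini-batches break the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


class LinearQ:
    """Stand-in for a Q-network: one weight vector per action over the state features."""

    def __init__(self, n_features, n_actions):
        self.weights = [[0.0] * n_features for _ in range(n_actions)]

    def values(self, state):
        return [sum(w * x for w, x in zip(ws, state)) for ws in self.weights]


def sync_target(online_q, target_q):
    """Copy the online weights into the target network (done every N training steps)."""
    target_q.weights = copy.deepcopy(online_q.weights)


def td_targets(batch, target_q, gamma=0.99):
    """Bootstrapped targets use the frozen target network, not the one being trained."""
    return [
        reward if done else reward + gamma * max(target_q.values(next_state))
        for _, _, reward, next_state, done in batch
    ]


online_q = LinearQ(n_features=2, n_actions=2)
target_q = LinearQ(n_features=2, n_actions=2)
sync_target(online_q, target_q)

buffer = ReplayBuffer(capacity=100)
buffer.add((1.0, 0.0), 0, 1.0, (0.0, 1.0), False)
buffer.add((0.0, 1.0), 1, 0.0, (1.0, 1.0), True)
targets = td_targets(buffer.sample(2), target_q)
```

Because the target network changes only at sync points, the training targets stay fixed between syncs instead of shifting with every gradient step.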

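Instead of deriving a policy from value estimates, policy gradient methods directly learn the policy itself: the rule that maps states to actions.

- Policy gradient methods adjust the policy's parameters in the direction that increases expected cumulative reward. Pure policy gradient methods such as REINFORCE need no value function, while actor-critic methods add a learned value estimate (the critic) to make these updates more stable.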

- These methods are particularly well-suited for continuous action spaces and can handle stochastic policies. A stochastic policy means the agent doesn't always pick the single "best" action but rather samples from a probability distribution over actions, which can help it explore more effectively and avoid getting stuck in local optima.

Consider a financial trading system. Instead of estimating the value of each possible action, the agent learns to adjust its trading strategy directly based on market conditions. Well-known policy gradient algorithms include REINFORCE, Actor-Critic methods, and Proximal Policy Optimization (PPO).
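A minimal REINFORCE sketch on a hypothetical three-action problem (think of three stylized trading actions, such as reduce, hold, and increase a position, with made-up average payoffs). The policy is a softmax over learnable preferences, so actions are sampled stochastically, and each update moves the preferences along the gradient of log π(a) scaled by how much better than average the reward was. Subtracting a running-average baseline is a common variance-reduction choice; the payoffs, noise level, and learning rate are all assumptions.

```python
import math
import random

MEAN_PAYOFF = [0.2, 0.5, 1.0]  # hypothetical average reward of each action


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(steps=3000, lr=0.05, seed=0):
    rng = random.Random(seed)
    prefs = [0.0, 0.0, 0.0]  # policy parameters: one preference per action
    baseline = 0.0
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        # Stochastic policy: sample an action from the probability distribution.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = MEAN_PAYOFF[action] + rng.gauss(0.0, 0.1)
        baseline += (reward - baseline) / t  # running average of all rewards
        advantage = reward - baseline
        # Gradient of log softmax with respect to preference k: 1[k == action] - probs[k].
        for k in range(len(prefs)):
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * advantage * grad_log
    return softmax(prefs)


final_probs = reinforce()
print([round(p, 3) for p in final_probs])
```

The agent never estimates a value for each action; it simply shifts probability mass toward actions that have paid off better than the running average.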

Now that we've explored these key RL algorithms, let's look at the benefits they bring to AI agent training.

Benefits of Reinforcement Learning for AI Agent Training

Did you know that Reinforcement Learning (RL) can enable AI agents to master complex tasks without explicit programming? Let's explore how RL unlocks powerful capabilities for AI agent training.

RL agents truly shine when it comes to adapting to dynamic and uncertain environments. Unlike traditional methods that struggle with change, RL agents continuously learn from their interactions.

- A key strength of RL is its ability to adapt to changing conditions and unexpected events by continuously learning from experience.

- This adaptability is especially important in real-world scenarios where environments are rarely static or predictable. For instance, a supply chain management system can use RL to adapt to fluctuating demand and unforeseen disruptions.

- RL algorithms are designed to learn from noisy, stochastic feedback, which makes them well suited to uncertain environments.

RL algorithms excel at optimizing for long-term cumulative rewards, enabling them to make strategic decisions that go beyond immediate gains.

- They can effectively manage delayed rewards and complex reward structures, allowing them to learn intricate behaviors.

- This approach is particularly valuable for tasks where immediate rewards don't reflect overall success. An energy management system, for example, can use RL to optimize energy consumption over months, considering factors like weather patterns and energy prices.

- This capability allows RL agents to handle scenarios where short-term sacrifices are necessary for long-term success.
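The trade-off between short-term sacrifice and long-term gain can be made concrete with the discounted return G = r₀ + γr₁ + γ²r₂ + …, the quantity RL agents typically maximize. The reward sequences below are hypothetical: one plan earns a little every step, while the other pays an upfront cost (say, an investment in more efficient equipment) and earns more later.

```python
def discounted_return(rewards, gamma):
    """Sum of rewards, each weighted by gamma**t for the step t at which it arrives."""
    g = 0.0
    for r in reversed(rewards):  # work backwards: G_t = r_t + gamma * G_{t+1}
        g = r + gamma * g
    return g


steady = [1.0] * 10                # hypothetical: +1 every step
invest = [-3.0, -3.0] + [3.0] * 8  # hypothetical: upfront cost, bigger payoff later

for gamma in (0.5, 0.95):
    print(gamma,
          round(discounted_return(steady, gamma), 2),
          round(discounted_return(invest, gamma), 2))
```

With γ = 0.5 the agent is short-sighted and prefers the steady plan; with γ = 0.95 future rewards carry enough weight that the upfront cost pays off.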

One of the most significant advantages of RL is its ability to learn with minimal labeled data. RL agents primarily learn through trial and error.

- This reduces the cost and effort associated with data collection and annotation, which can be a major bottleneck for other machine learning methods.

- Because the reward signal takes the place of hand-labeled examples, RL reduces the human effort needed to train AI agents.

- Consider an automated trading system. The agent can learn trading strategies by interacting with market data, or a simulation of it, and receiving rewards based on its performance, rather than relying on a large set of labeled examples of correct trades.

Now that we've seen the benefits of using RL for AI agent training, let's explore some real-world applications.

Real-World Applications of Reinforcement Learning in Business Automation

Is it possible for AI to revolutionize how businesses interact with customers and manage their operations? Reinforcement Learning (RL) is making this a reality by providing solutions for a variety of business automation challenges.

While RL offers broad applications, specialized solutions are emerging to help businesses harness its power. One such provider is Compile7, which focuses on developing custom AI agents.

RL can train chatbots to provide more personalized and effective customer service.

- Agents learn to optimize dialogue strategies by receiving rewards for resolving customer issues and penalties for negative interactions. This allows the chatbot to handle a wide array of customer inquiries.

- RL-powered chatbots can adapt to individual customer needs and preferences, improving satisfaction and loyalty. They can learn from each interaction to tailor their responses, creating a more engaging experience.
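The reward design described above could be sketched as follows. The outcome fields (`issue_resolved`, `negative_feedback`, `turns`) and the weights are hypothetical, and the per-turn cost is an added assumption; in a real deployment these signals would come from ticket outcomes or satisfaction surveys and would need careful tuning, since the agent optimizes whatever the function actually rewards.

```python
from dataclasses import dataclass


@dataclass
class DialogueOutcome:
    """Hypothetical summary of one finished customer conversation."""
    issue_resolved: bool
    negative_feedback: bool
    turns: int


def dialogue_reward(outcome, resolve_bonus=1.0, negative_penalty=1.0, turn_cost=0.05):
    reward = 0.0
    if outcome.issue_resolved:
        reward += resolve_bonus          # reward for resolving the customer's issue
    if outcome.negative_feedback:
        reward -= negative_penalty       # penalty for a negative interaction
    reward -= turn_cost * outcome.turns  # assumed small cost per turn to discourage long chats
    return reward


print(dialogue_reward(DialogueOutcome(issue_resolved=True, negative_feedback=False, turns=4)))
```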

RL can optimize supply chain operations by learning to predict demand, manage inventory, and schedule deliveries.

- Agents learn to minimize costs and maximize efficiency by receiving rewards for meeting customer demand and penalties for stockouts or excess inventory. This helps in maintaining an optimal balance.

- RL-powered supply chain systems can adapt to changing market conditions and disruptions, ensuring resilience and agility. For example, during a sudden surge in demand, the system can dynamically adjust delivery schedules to prioritize critical orders.
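A minimal sketch of one step of an inventory-control environment in the spirit of the description above: the state is the stock level, the action is an order quantity, and the reward combines sales revenue with penalties for stockouts and excess inventory. All prices, costs, and demand figures are hypothetical, and orders are assumed to arrive before the period's demand.

```python
def inventory_step(stock, order_qty, demand,
                   unit_price=10.0, unit_cost=6.0, holding_cost=0.5, stockout_penalty=4.0):
    """Return (next_stock, reward) for one period. All parameters are illustrative."""
    available = stock + order_qty  # assume this period's order arrives before demand
    sold = min(available, demand)
    unmet = demand - sold          # stockouts
    leftover = available - sold    # excess inventory carried into the next period
    reward = (unit_price * sold
              - unit_cost * order_qty
              - holding_cost * leftover
              - stockout_penalty * unmet)
    return leftover, reward


print(inventory_step(stock=5, order_qty=10, demand=12))  # → (3, 58.5)
```

An RL agent would call a step like this repeatedly with uncertain demand and learn an ordering policy that balances the revenue terms against the two penalties.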

RL can train trading agents to make investment decisions, typically by learning in simulated markets built from historical market data.

- Agents learn to identify patterns and trends, and to execute trades at optimal times. They can analyze vast amounts of market data to find opportunities that humans might miss.

- RL-powered trading systems can adapt to changing market dynamics and manage risk effectively. This allows for more informed decisions and potentially higher returns.

As mentioned earlier, RL excels in optimizing processes to gain maximum cumulative rewards, making it ideal for financial applications.

Compile7: Custom AI Agents that Transform Your Business

Is your business struggling with complex automation challenges that traditional AI can't solve? Compile7 offers custom AI agents designed to transform how your business operates by leveraging the power of Reinforcement Learning (RL).

Compile7 develops custom AI agents that automate tasks and enhance productivity.

- We specialize in integrating Reinforcement Learning to build AI agents that are adaptive, intelligent, and goal-oriented. RL allows agents to learn through trial and error, making them capable of handling complex tasks without explicit programming. Our development process involves understanding the specific business problem, defining the state and action spaces, designing a suitable reward function, and then training an RL agent using algorithms like PPO or DQN. We often use frameworks like TensorFlow or PyTorch and leverage cloud platforms for scalable training and deployment.

- Our RL-powered agents excel in complex, dynamic environments where traditional AI solutions fall short. This is particularly beneficial in scenarios where conditions change rapidly and unpredictably, requiring the AI to adapt in real time.

- We focus on optimizing for long-term cumulative rewards, enabling our agents to make strategic decisions that go beyond immediate gains.

Compile7 offers a range of AI agent solutions tailored to specific business needs.

- Our offerings include Customer Service Agents, Data Analysis Agents, Content Creation Agents, Research Assistants, Process Automation Agents, and Industry-Specific Agents. These agents are designed to enhance various aspects of your business operations, from customer interactions to data-driven decision-making.

- We work closely with our clients to understand their unique challenges and develop custom ai agents that deliver measurable results. This ensures that our solutions are perfectly aligned with your specific business objectives.

- Our agents are designed to improve efficiency, reduce costs, and enhance overall performance.

Here is a closer look at three of these agents, with results from client deployments.

- Customer Service Agents: Trained to handle complex queries, personalize interactions, and resolve issues efficiently. For example, one of our clients in e-commerce saw a 20% reduction in customer service response times and a 15% increase in customer satisfaction after deploying our RL-powered chatbot.

- Data Analysis Agents: Capable of identifying patterns, predicting trends, and automating complex data processing tasks. A financial services firm utilized our agents to automate anomaly detection in transactions, reducing false positives by 30% and saving significant analyst time.

- Process Automation Agents: Streamline repetitive tasks and optimize workflows across departments. A manufacturing client implemented our agents to optimize production scheduling, leading to a 10% increase in throughput and a 5% reduction in waste.

Partner with Compile7 to unlock the full potential of AI-powered automation.

- Our team of AI experts will guide you through the process of designing, developing, and deploying custom AI agents that drive business value. We provide end-to-end support to ensure a smooth and successful implementation.

- We offer a comprehensive suite of services, including AI consulting, development, implementation, and support. This ensures that you have the resources and expertise you need to succeed with AI.

- Contact us today to learn how Compile7 can help you transform your business with intelligent AI solutions.

Challenges and Future Directions in Reinforcement Learning

One of the biggest hurdles in Reinforcement Learning (RL) is making the most of limited data. Imagine trying to teach a robot to perform surgery with only a handful of practice runs. How can we make RL more efficient?

RL algorithms often demand vast amounts of training data to achieve optimal performance. This can be impractical, especially when dealing with real-world scenarios where data collection is costly or time-consuming.

Improving sample efficiency is a key focus in RL research. The goal is to develop techniques that allow agents to learn effectively from fewer interactions with the environment.

Techniques like transfer learning, where knowledge gained from one task is applied to another, can significantly reduce the amount of data needed. Similarly, imitation learning enables agents to learn from expert demonstrations, while model-based RL uses learned models to simulate and plan, further enhancing data efficiency.
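Imitation learning in its simplest form, behavioral cloning, treats expert demonstrations as a supervised dataset of (state, action) pairs. The sketch below assumes a small discrete state space and copies the expert's most frequent action in each state. The demonstration data is invented; real systems would more typically fit a neural network policy and could then fine-tune it with RL.

```python
from collections import Counter, defaultdict

# Hypothetical expert demonstrations: (state, action) pairs.
demos = [
    ("low_stock", "reorder"), ("low_stock", "reorder"), ("low_stock", "wait"),
    ("high_stock", "wait"), ("high_stock", "wait"),
    ("normal", "wait"), ("normal", "reorder"), ("normal", "wait"),
]


def behavioral_cloning(demonstrations):
    """Map each state to the action the expert chose most often in it."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


policy = behavioral_cloning(demos)
print(policy["low_stock"])  # → reorder
```

No environment interaction is needed to obtain this starting policy, which is where the data savings come from.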

Designing effective reward functions is crucial for guiding RL agents towards desired behaviors. A well-crafted reward function ensures that the agent learns the appropriate strategy to achieve its goals.

Poorly designed reward functions can lead to unintended consequences or suboptimal performance. For example, a poorly designed reward system for a cleaning robot might incentivize it to simply move objects around rather than actually cleaning them.

Techniques like reward shaping, where intermediate rewards are provided to guide learning, can improve reward function design. Curriculum learning, which involves gradually increasing the complexity of the task, and inverse reinforcement learning, where the reward function is learned from expert behavior, are also valuable tools.
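One well-studied form of reward shaping is potential-based shaping (Ng, Harada, and Russell, 1999), which adds γΦ(s′) − Φ(s) to the environment reward for some potential function Φ over states; shaping of this form leaves the optimal policy unchanged. The sketch below uses a hypothetical cleaning-robot potential, the number of clean cells, so the robot earns intermediate credit for removing dirt but nothing for merely moving objects around.

```python
def potential(state):
    """Hypothetical potential: the number of clean cells (dirty flag == 0)."""
    return len(state["dirty"]) - sum(state["dirty"])


def shaped_reward(reward, state, next_state, gamma=0.99, done=False):
    """Potential-based shaping: r + gamma * phi(s') - phi(s)."""
    next_phi = 0.0 if done else potential(next_state)  # terminal states get potential 0
    return reward + gamma * next_phi - potential(state)


before = {"dirty": [1, 1, 0]}
after_cleaning = {"dirty": [1, 0, 0]}
after_moving_things = {"dirty": [1, 1, 0]}  # objects moved, nothing cleaned

print(shaped_reward(0.0, before, after_cleaning))       # positive: a cell got cleaned
print(shaped_reward(0.0, before, after_moving_things))  # slightly negative: no progress
```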

Explainability and interpretability are becoming increasingly important for RL applications. As RL agents are deployed in critical domains, understanding their decision-making process becomes essential.

Understanding why an agent makes a particular decision can help build trust and identify potential biases or flaws. This is especially important in areas like healthcare, where decisions can have life-altering consequences.

Research is ongoing to develop methods for visualizing and explaining RL agent behavior. Techniques include attention mechanisms, rule extraction, and sensitivity analysis.
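Sensitivity analysis can be as simple as nudging each input feature and measuring how much the agent's value estimate for its chosen action changes. The sketch below assumes a hypothetical Q-function over three named features. It is linear for brevity, so the probe simply recovers its weights, but the same finite-difference probing works on any black-box Q-function.

```python
FEATURES = ["demand_forecast", "stock_level", "price"]

# Hypothetical trained Q-function: one linear score per action over the features.
WEIGHTS = {
    "reorder": [0.8, -1.2, 0.1],
    "wait":    [0.1, 0.9, 0.0],
}


def q_values(state):
    return {a: sum(w * x for w, x in zip(ws, state)) for a, ws in WEIGHTS.items()}


def sensitivity(state, eps=1e-3):
    """Finite-difference change in the chosen action's Q-value per unit change in each feature."""
    base = q_values(state)
    action = max(base, key=base.get)
    scores = {}
    for i, name in enumerate(FEATURES):
        perturbed = list(state)
        perturbed[i] += eps
        scores[name] = (q_values(perturbed)[action] - base[action]) / eps
    return action, scores


action, scores = sensitivity([1.0, 0.2, 0.5])
print(action, {k: round(v, 3) for k, v in scores.items()})
```

Larger magnitudes suggest the decision leans more heavily on that feature; here the choice to reorder is driven mainly by stock level and the demand forecast, not by price.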

As we look ahead, addressing these challenges will be critical for unlocking the full potential of RL in business automation.

Conclusion: Embracing Reinforcement Learning for the Future of AI Agents

Reinforcement Learning (RL) is a transformative force reshaping AI agent training and business automation, and its potential is vast.

RL is revolutionizing the way AI agents are trained, enabling them to solve complex problems and adapt to dynamic environments.

As RL algorithms continue to improve, they will play an increasingly important role in business automation and intelligent decision-making.

Businesses that embrace RL will gain a competitive advantage by leveraging the power of adaptable and goal-oriented AI agents.

We encourage business leaders and AI strategists to explore the potential of RL for their specific needs.

Identify areas where AI agents can enhance efficiency, reduce costs, and improve decision-making.

Partner with AI experts to design, develop, and deploy RL-powered solutions that drive business value.

Resources for learning more include:

- Online courses and tutorials, such as the Hugging Face Deep RL Course, which covers both the theory and the practical aspects of Deep Reinforcement Learning.

- Research papers and articles on the latest advancements in RL algorithms.

- Open-source RL libraries and frameworks for experimentation and development.

RL inherently focuses on long-term reward maximization, which makes it apt for scenarios where actions have prolonged consequences.

Embracing RL is a strategic move toward a future where AI agents autonomously optimize and adapt to evolving business landscapes.