Explainable AI (XAI) for Agent Transparency: Building Trust in AI-Powered Automation

The Imperative of Transparency in AI Agents

Imagine an AI agent making critical business decisions while its reasoning remains a mystery. This "black box" problem is becoming increasingly prevalent, highlighting the urgent need for transparency in AI.

AI agents are now being used for vital business functions, from customer service to financial analysis.

Complex AI models, particularly those using deep learning, often function as "black boxes." IBM explains that these models are created directly from data, making it difficult to understand how they arrive at specific results.

This lack of transparency poses significant challenges for building trust, ensuring accountability, and meeting regulatory compliance requirements. For example, regulations like GDPR and the EU AI Act increasingly demand transparency in automated decision-making.

Building trust is essential for the widespread adoption of AI agents. If users don't understand how an AI agent makes decisions, they are less likely to trust and rely on its outputs.

Explainability allows users to validate AI agent decisions. This is particularly important in sectors like healthcare, where understanding the reasoning behind a diagnosis is crucial.

Transparency helps to identify and mitigate potential biases and errors in AI agent behavior, ensuring fairer and more reliable outcomes.

Improved decision-making stems from a better understanding of AI agent outputs, leading to more informed and effective strategies.

Reduced risk of errors and biases can lead to better business results, as AI agents are less likely to make flawed or discriminatory decisions.

Enhanced compliance with regulations and ethical guidelines is facilitated by the transparency of XAI, helping organizations avoid legal and reputational risks.

As Salesforce puts it, Explainable AI is "AI that can justify its decisions in human-understandable terms."

Now that we've established why transparency is so critical, let's explore how Explainable AI can help achieve it.

What is Explainable AI (XAI)?

Did you know that some AI models are so complex that even their creators don't fully understand how they arrive at decisions? This "black box" problem is where Explainable AI (XAI) comes to the rescue.

XAI encompasses methods and techniques that make AI models more understandable to humans. It's about opening up the "black box" and shedding light on the inner workings of AI.

- XAI aims to provide insights into how AI models arrive at specific decisions or predictions. Instead of just accepting an AI's output, XAI helps us understand why that output was generated.

- XAI bridges the gap between complex AI algorithms and human comprehension. This allows users, developers, and stakeholders to understand, trust, and effectively manage AI systems.

Traditional AI and XAI differ mainly in what they optimize for.

- Traditional AI focuses on performance and accuracy, often sacrificing transparency. The goal is to get the right answer, regardless of how it's achieved.

- XAI prioritizes both performance and explainability. It aims to create AI models that are not only accurate but also understandable.

- XAI implements specific techniques to ensure each decision can be traced and explained. This might involve using simpler models, adding explanation modules, or employing post-hoc analysis methods.

While often used interchangeably, interpretability and explainability have distinct meanings in the context of AI.

- Interpretability refers to the degree to which a human can understand the cause of a decision. It's about being able to intuitively grasp how the model works.

- Explainability goes a step further by giving specific reasons and justifications for the model's output.

- Explainability provides insights into the model's reasoning process, offering a detailed account of the factors and steps that led to the decision. In essence, it answers the question, "Why did the AI do that?"

Now that we understand what XAI is, let's explore the practical techniques used to achieve this transparency in AI agents.

XAI Techniques for Enhancing Agent Transparency

Ever wondered how AI agents arrive at their conclusions? Explainable AI (XAI) offers a peek under the hood, using various techniques to make these processes transparent.

Let's explore some of the key XAI techniques that can enhance agent transparency.

When it comes to explaining AI agents, there are two main approaches: model-agnostic and model-specific methods.

- Model-agnostic methods are like universal translators; they can be applied to any AI model, regardless of its internal structure. Examples include LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations).

- Model-specific methods, on the other hand, are tailored for specific types of models. Rule extraction for decision trees is a prime example: it converts the tree's structure into a set of IF-THEN rules that are easier for humans to understand, providing direct insight into how the model makes decisions.

- The choice between these methods hinges on the complexity and nature of the AI agent. Simpler models might benefit from model-specific techniques, while complex "black box" models often require the flexibility of model-agnostic approaches.
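To make the rule-extraction idea concrete, here is a minimal sketch in plain Python. The tree, its feature names (`amount`, `account_age_days`), and its thresholds are hypothetical and chosen only for illustration; a real project would extract rules from a fitted model rather than a hand-written dictionary.

```python
# Hypothetical toy decision tree. Internal nodes test "feature <= threshold";
# leaves carry a predicted label. All names and values are illustrative.
TREE = {
    "feature": "amount",
    "threshold": 1000.0,
    "left": {"label": "approve"},
    "right": {
        "feature": "account_age_days",
        "threshold": 30.0,
        "left": {"label": "flag"},
        "right": {"label": "approve"},
    },
}


def extract_rules(node, conditions=()):
    """Walk the tree depth-first and return one IF-THEN rule per leaf."""
    if "label" in node:
        premise = " AND ".join(conditions) if conditions else "TRUE"
        return [f"IF {premise} THEN {node['label']}"]
    feature, threshold = node["feature"], node["threshold"]
    # The left branch is taken when the test holds, the right branch otherwise.
    left = extract_rules(node["left"], conditions + (f"{feature} <= {threshold}",))
    right = extract_rules(node["right"], conditions + (f"{feature} > {threshold}",))
    return left + right


rules = extract_rules(TREE)
for rule in rules:
    print(rule)
```

Each path from the root to a leaf becomes one rule, so the rule list is an exact restatement of the tree rather than an approximation of it.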

LIME is like having a magnifying glass for AI predictions. It focuses on explaining individual predictions by approximating the AI model locally with an interpretable model.

- LIME highlights the features that contribute most to a specific prediction. It essentially creates a simplified, understandable model around a single data point.

- This is particularly useful for understanding why an AI agent made a particular decision in a specific instance. For example, in fraud detection, LIME can reveal why a certain transaction was flagged as suspicious.

- LIME helps to build trust in AI agents by showing users exactly which factors influenced a specific outcome, fostering greater confidence in the AI's decision-making process.

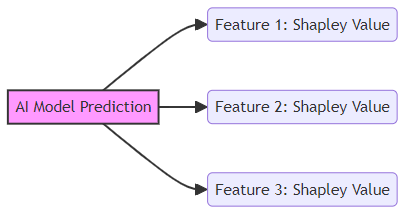
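The core idea behind LIME can be sketched without any library: perturb the input around one instance, weight each perturbed sample by its proximity to that instance, and fit a weighted linear model whose coefficients serve as the local explanation. The code below is a simplified illustration under stated assumptions, not the LIME library itself. The `black_box` function, the Gaussian perturbation scale, and the kernel width are all hypothetical choices.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model: a made-up risk score in (0, 1)."""
    z = 3.0 * x[0] - 1.0 * x[1] + 0.5 * x[0] * x[1]
    return 1.0 / (1.0 + math.exp(-z))


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def lime_explain(predict, instance, n_samples=500, scale=0.3,
                 kernel_width=0.5, seed=0):
    """Return local linear coefficients, one per feature, around `instance`."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist_sq = sum((s - v) ** 2 for s, v in zip(sample, instance))
        rows.append([1.0] + sample)  # leading 1.0 is the intercept column
        targets.append(predict(sample))
        weights.append(math.exp(-dist_sq / kernel_width ** 2))  # closer = heavier
    k = len(instance) + 1
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights))
            for i in range(k)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[1:]  # drop the intercept, keep per-feature slopes


coefficients = lime_explain(black_box, [1.0, 1.0])
print(coefficients)
```

A positive coefficient means the feature pushes this particular prediction up, a negative one pushes it down. The coefficients describe the model only near the chosen instance, which is why the explanation is called local.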

SHAP takes a game-theoretic approach to explainable AI. It assigns each feature a Shapley value, representing its contribution to the prediction, much like fairly distributing winnings in a game.

- SHAP provides a consistent and accurate measure of feature importance, ensuring that each feature's contribution is fairly represented.

- It can be used for both local and global explanations, offering a comprehensive view of feature importance across the entire model or for individual predictions.

- SHAP values can also help identify biases in the model, ensuring fairer and more equitable outcomes.
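As a rough illustration of what a Shapley value is, the sketch below computes exact Shapley values for a tiny model by enumerating every feature subset. It is not the SHAP library: it assumes a single baseline input stands in for "feature absent", whereas practical tools typically average over a background dataset and use approximations. The `toy_model` function and its inputs are hypothetical.

```python
from itertools import combinations
from math import factorial


def toy_model(x):
    """Hypothetical model with one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The values add up to the difference between the prediction for the instance and the prediction for the baseline, and the two features that interact split their joint contribution equally. Enumerating all subsets costs on the order of 2^n model calls, which is why exact computation is only practical for a handful of features.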

A Partial Dependence Plot (PDP) offers a visual way to understand the relationship between a feature and the predicted outcome. It averages out the effects of the other features to isolate the impact of the feature in question.

- A PDP helps you understand the marginal effect of a feature on the AI agent's predictions. This is useful for identifying the optimal range for a particular feature to achieve the desired outcome.

- It can reveal non-linear relationships, and a two-feature PDP can surface interactions between features, providing a deeper understanding of the AI agent's behavior. For instance, in retail, a PDP might show how a promotional discount affects sales, revealing the point at which the discount becomes ineffective.
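The averaging step behind a PDP is simple enough to sketch directly. In the example below, the sales model, its 30% saturation point, and the three-row dataset are all hypothetical; the point is only to show how the curve is computed.

```python
def sales_model(row):
    """Hypothetical model: the discount lifts sales but stops helping past 30%."""
    discount, base_demand = row
    return base_demand * (1.0 + 2.0 * min(discount, 0.3))


def partial_dependence(predict, dataset, feature_index, grid):
    """For each grid value, force the feature to that value in every row
    and average the model's predictions over the dataset."""
    curve = []
    for value in grid:
        total = 0.0
        for row in dataset:
            modified = list(row)
            modified[feature_index] = value
            total += predict(modified)
        curve.append(total / len(dataset))
    return curve


# Each row is (discount, base_demand); values are made up.
dataset = [(0.1, 100.0), (0.2, 200.0), (0.0, 300.0)]
grid = [0.0, 0.1, 0.2, 0.3, 0.4]
curve = partial_dependence(sales_model, dataset, feature_index=0, grid=grid)

for discount, avg_sales in zip(grid, curve):
    print(f"discount={discount:.1f} -> average predicted sales={avg_sales:.1f}")
```

Plotting `curve` against `grid` gives the PDP. In this toy setup the curve rises and then flattens between the last two grid points, which is the kind of "discount stops working" signal the retail example describes.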

These XAI techniques are essential tools for unlocking the "black box" of AI agents. Understanding these techniques can help build trust and improve decision-making.

Now that we've explored some XAI techniques, let's look at how to implement them during AI agent development.

Implementing XAI in AI Agent Development

Want AI agents that not only perform well but also explain why they made a particular decision? Implementing Explainable AI (XAI) during the development process is key to achieving this level of transparency and trust.

Incorporating XAI early in the AI agent development lifecycle can yield significant benefits.

- Integrate XAI tools and techniques from the outset; this ensures that explainability is a core design principle rather than an afterthought. For instance, when designing a fraud detection AI agent, include LIME or SHAP analysis from the initial model training phase to understand which features the model relies on to flag suspicious transactions.

- Use XAI to validate model behavior and identify potential issues. By continuously monitoring explanations, developers can detect unexpected or undesirable behavior, such as biases or errors in the model's reasoning.

- Automate the generation of explanations to ensure continuous monitoring. Set up automated processes to generate explanations for each decision made by the AI agent. This allows for real-time monitoring and proactive identification of potential issues, ensuring that the agent remains transparent and reliable over time.
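One way to automate explanation generation is to wrap the agent's decision function so that every call also records an explanation. The sketch below assumes a hypothetical linear scoring agent, where each feature's contribution is simply its weight times its value; the weights, threshold, and feature names are invented for illustration, and a non-linear agent would need a technique such as LIME or SHAP in place of `explain`.

```python
import json
import time

# Hypothetical linear scoring agent; weights and threshold are made up.
WEIGHTS = {"amount": 0.002, "new_device": 1.5, "foreign_ip": 1.0}
THRESHOLD = 2.0


def decide(features):
    score = sum(WEIGHTS[name] * value for name, value in features.items())
    return "flag" if score >= THRESHOLD else "allow"


def explain(features):
    # For a linear score, weight * value is each feature's exact contribution.
    return {name: WEIGHTS[name] * value for name, value in features.items()}


def with_explanation_log(decide_fn, explain_fn, log):
    """Wrap an agent so every decision is stored alongside its explanation."""
    def audited(features):
        decision = decide_fn(features)
        record = {
            "timestamp": time.time(),
            "features": features,
            "decision": decision,
            "explanation": explain_fn(features),
        }
        log.append(json.dumps(record))  # one JSON line per decision
        return decision
    return audited


audit_log = []
agent = with_explanation_log(decide, explain, audit_log)
first = agent({"amount": 900, "new_device": 1, "foreign_ip": 0})
second = agent({"amount": 120, "new_device": 0, "foreign_ip": 0})
print(first, second, len(audit_log))
```

Because the log holds one self-describing record per decision, it can be scanned later for drift, for example a feature whose contribution suddenly dominates most decisions.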

Generating explanations is only half the battle; presenting them effectively is equally important.

- Tailor explanations to the target audience. A data scientist might appreciate detailed feature importance plots, while a business user might prefer a simple summary of the key factors influencing a decision. For example, to a data scientist, you might show a SHAP plot detailing the exact contribution of each feature to a loan denial. To a business user, you'd present a simpler explanation like, "The loan was denied primarily due to a low credit score and a recent history of late payments."

- Use visualizations to communicate complex information effectively. Visual aids such as partial dependence plots or SHAP summary plots can help stakeholders quickly grasp the key drivers behind an ai agent's decisions.

- Provide clear and concise explanations that are easy to understand. Use plain language and avoid technical jargon when communicating explanations to non-technical users.

- Focus on the most relevant features and factors influencing the decision. Highlight the top features that contributed most to the ai agent's decision, making it easier for users to understand the reasoning process.
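The audience-tailoring idea can be sketched as two views over the same feature contributions: a full ranked list for a data scientist and a one-sentence summary for a business user. The contribution numbers below are invented and stand in for the output of whichever attribution method is in use.

```python
def rank_contributions(contributions):
    """Sort features by absolute contribution, largest first."""
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


def technical_view(contributions):
    """Detailed view: every feature with its signed contribution."""
    return [f"{name}: {value:+.2f}" for name, value in rank_contributions(contributions)]


def business_view(outcome, contributions, top_k=2):
    """Plain-language view: name only the top_k most influential factors."""
    top = [name for name, _ in rank_contributions(contributions)[:top_k]]
    return f"The application was {outcome} primarily due to {' and '.join(top)}."


# Hypothetical contributions to a loan decision (negative lowers the score).
contributions = {
    "income": 0.08,
    "a low credit score": -0.42,
    "account age": 0.02,
    "a recent history of late payments": -0.31,
}

detailed = technical_view(contributions)
summary = business_view("denied", contributions)
print("\n".join(detailed))
print(summary)
```

Keeping both views derived from the same numbers helps ensure the simple summary never contradicts the detailed analysis.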

Implementing XAI is not without its challenges.

- One challenge is the computational cost of XAI methods, especially for large datasets. Techniques like SHAP, while powerful, can be computationally intensive.

- The complexity of interpreting and communicating explanations can also be a hurdle. Finding the right balance between detail and simplicity is crucial for ensuring that explanations are both accurate and understandable.

- Ensuring the fidelity of explanations, i.e., that they accurately reflect the model's behavior, is another key challenge. Developers need to validate that the explanations generated by XAI methods are consistent with the underlying model's decision-making process.
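Fidelity can be checked empirically by comparing an explanation model against the model it explains on samples near the instance of interest. The sketch below uses R² as the fidelity score, which is one reasonable choice among several. The `black_box` function and both surrogate models are hypothetical.

```python
import random


def black_box(x):
    """Stand-in for the model being explained."""
    return x[0] ** 2 + x[1]


def good_surrogate(x):
    """Tangent-plane approximation of black_box at the point (1, 1)."""
    return 2.0 * x[0] + x[1] - 1.0


def bad_surrogate(x):
    """Deliberately wrong explanation: the sign on the first feature is flipped."""
    return -2.0 * x[0] + x[1] + 3.0


def local_fidelity(predict, surrogate, instance, n_samples=200, scale=0.3, seed=0):
    """R^2 of the surrogate against the model on perturbed copies of `instance`."""
    rng = random.Random(seed)
    samples = [[v + rng.gauss(0.0, scale) for v in instance] for _ in range(n_samples)]
    actual = [predict(s) for s in samples]
    approx = [surrogate(s) for s in samples]
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, approx))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


good_score = local_fidelity(black_box, good_surrogate, [1.0, 1.0])
bad_score = local_fidelity(black_box, bad_surrogate, [1.0, 1.0])
print(f"good surrogate R^2: {good_score:.3f}")
print(f"bad surrogate R^2:  {bad_score:.3f}")
```

A score close to 1 suggests the explanation tracks the model in that neighborhood, while a low or negative score is a warning that the explanation should not be shown to users as-is.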

By proactively addressing these challenges, organizations can successfully integrate XAI into their ai agent development workflows and enhance the transparency and trustworthiness of their ai systems.

Now that we've covered the implementation of XAI, let's explore its applications across various industries.

XAI for Specific AI Agent Applications

Is your AI agent truly helping customers, or is it just adding another layer of complexity? Explainable AI (XAI) can ensure your AI isn't just intelligent but also transparent, building trust and improving outcomes across various applications.

Imagine a customer asking a chatbot why their credit card application was denied. XAI can step in to provide a clear, understandable explanation, such as "Your application was denied due to a low credit score and a recent history of late payments."

- Explainable AI can help customers understand why a chatbot provided a specific response. Instead of generic answers, customers receive personalized explanations based on the AI's reasoning.

- Transparency can increase customer trust and satisfaction. When customers understand why an ai made a certain decision, they are more likely to trust the system and feel satisfied with the interaction.

- XAI can identify and mitigate biases in chatbot responses. By examining the factors influencing the ai's decisions, companies can ensure that their chatbots are fair and unbiased.

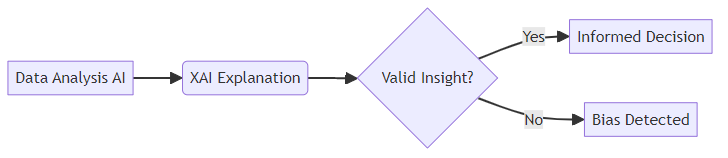

Data analysis AI can uncover hidden patterns, but are those insights reliable? XAI helps ensure data-driven decisions are based on valid and understandable reasoning.

- XAI can help data analysts understand the factors driving insights generated by AI models. Instead of blindly accepting the AI's output, analysts can validate the reasoning behind the insights.

- Transparency can prevent misinterpretations and ensure the validity of findings. By understanding how the AI arrived at a particular conclusion, analysts can avoid costly errors and make more informed decisions.

- XAI can identify potential biases in data analysis AI. For example, if an AI model consistently undervalues properties in a specific neighborhood, XAI can help uncover and correct this bias.
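A first-pass check for the neighborhood example is to compare the model's average signed error across groups. The sketch below uses a handful of invented valuation records (in thousands) and hypothetical group names; a consistent negative average for one group would be the cue to dig deeper with feature-level explanations.

```python
from collections import defaultdict


def mean_error_by_group(records):
    """Average (predicted - actual) per group; negative means undervaluation."""
    totals = defaultdict(lambda: [0.0, 0])
    for record in records:
        entry = totals[record["group"]]
        entry[0] += record["predicted"] - record["actual"]
        entry[1] += 1
    return {group: error_sum / count for group, (error_sum, count) in totals.items()}


# Hypothetical property valuations, in thousands.
records = [
    {"group": "north", "predicted": 310, "actual": 300},
    {"group": "north", "predicted": 290, "actual": 300},
    {"group": "south", "predicted": 250, "actual": 300},
    {"group": "south", "predicted": 270, "actual": 300},
]

errors = mean_error_by_group(records)
for group, error in errors.items():
    print(f"{group}: mean error {error:+.1f}")
```

This is a screening step rather than proof of bias: a gap between groups says where to look, and attribution methods help explain which inputs are driving it.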

Process automation driven by AI can streamline operations, but what happens when something goes wrong? XAI can provide the necessary transparency to ensure reliability and accountability.

- XAI can help users understand why an AI agent made a specific decision in an automated process. For example, if an AI agent rejects an invoice, XAI can explain that it was due to a mismatch in the purchase order number.

- Transparency can improve the reliability and accountability of automated processes. By understanding the AI's reasoning, users can identify and fix errors in the automated workflow.

- XAI can identify potential errors and biases in process automation AI. For instance, if an AI agent consistently prioritizes certain suppliers over others, XAI can help uncover whether this is due to legitimate factors or hidden biases.
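For rule-driven automation, the simplest form of explainability is to return reason codes with every decision. The sketch below assumes a hypothetical invoice-review step with made-up field names (`po_number`, `approved_amount`) and checks; it shows the pattern, not any particular product's logic.

```python
def review_invoice(invoice, purchase_orders):
    """Approve or reject an invoice and list every reason for a rejection."""
    reasons = []
    order = purchase_orders.get(invoice["po_number"])
    if order is None:
        reasons.append(f"purchase order number {invoice['po_number']} not found")
    else:
        if invoice["supplier"] != order["supplier"]:
            reasons.append("supplier does not match the purchase order")
        if invoice["amount"] > order["approved_amount"]:
            reasons.append("amount exceeds the approved purchase order amount")
    return {"decision": "rejected" if reasons else "approved", "reasons": reasons}


# Hypothetical reference data and invoices.
purchase_orders = {"PO-1001": {"supplier": "Acme", "approved_amount": 500.0}}

mismatched = review_invoice(
    {"po_number": "PO-1010", "supplier": "Acme", "amount": 450.0}, purchase_orders
)
valid = review_invoice(
    {"po_number": "PO-1001", "supplier": "Acme", "amount": 450.0}, purchase_orders
)
print(mismatched)
print(valid)
```

Collecting all failed checks, instead of stopping at the first, gives the person fixing the workflow a complete picture in one pass.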

As AI becomes more integrated into various applications, XAI is essential for fostering trust, ensuring accountability, and improving overall outcomes. Next, we'll look at where XAI and agent transparency are headed.

The Future of XAI and Agent Transparency

The future of AI agents isn't just about intelligence; it's about building trust through transparency. Let's explore what lies ahead for Explainable AI (XAI) and agent transparency.

XAI research is rapidly evolving to meet the demands of increasingly complex AI systems.

- One key trend is the development of more efficient and scalable XAI methods. As AI models grow larger, XAI techniques must keep pace to provide timely and relevant explanations.

- Another trend involves the integration of XAI into AI agent training processes. Rather than being an afterthought, explainability is becoming a core design principle.

- The development of standardized XAI metrics and benchmarks is anticipated to enable better comparison and evaluation of different XAI techniques.

XAI is not just a technical tool; it's a cornerstone of responsible AI development.

- XAI is a key enabler of responsible AI practices, helping organizations to ensure that their AI systems are fair, accountable, and transparent.

- Transparency and explainability are essential for building ethical and trustworthy AI systems. Users need to understand how AI decisions are made to trust them.

- XAI can help organizations comply with emerging AI regulations, which increasingly require transparency and explainability.

The future belongs to organizations that embrace XAI.

- Organizations need to invest in XAI tools and expertise to effectively implement explainable AI. This includes training data scientists and AI developers in XAI techniques.

- AI developers need to prioritize transparency and explainability in their designs, making XAI a core part of the development process.

- Users need to be educated about the benefits and limitations of XAI, fostering realistic expectations and informed decision-making.

As AI continues to transform industries, XAI will be essential for ensuring that these technologies are used responsibly and ethically.

Conclusion

Is Explainable AI just a passing trend, or is it here to stay? As AI agents become more integrated into our lives, the need for transparency is more critical than ever.

XAI is crucial for building trust in AI systems. As previously discussed, if users understand how an AI makes decisions, they are more likely to rely on its outputs.

Transparency enhances decision-making and reduces the risk of errors. For instance, in financial services, understanding the reasoning behind a loan approval can lead to more informed and effective strategies.

Implementing XAI requires a strategic approach and investment in appropriate tools and expertise. Organizations need to train data scientists and AI developers in XAI techniques to ensure its effective integration.

As AI becomes more pervasive, XAI will play an increasingly important role in shaping its development and deployment. This includes the development of more efficient and scalable XAI methods to handle larger AI models.

Prioritizing transparency and explainability is essential for realizing the full potential of AI while mitigating its risks. As AI regulations emerge, XAI will help organizations comply with requirements for transparency.

The future of AI is inextricably linked to the advancement and adoption of XAI. Embracing XAI is not just a technical choice but a commitment to responsible AI development.

By prioritizing transparency and investing in XAI, organizations can build AI systems that are not only intelligent but also trustworthy and beneficial for all.