Mastering Custom AI Model Optimization: A Guide for Business Leaders

Is your AI delivering real business value, or just costing you money? Optimizing custom AI models is critical for achieving peak performance and maximizing your return on investment. Let's explore why this is so important.

Enhanced Performance: Optimization leads to faster response times and greater accuracy. Imagine an AI-powered diagnostic tool in healthcare providing quicker, more reliable results to doctors.

Cost Reduction: Efficient models use fewer computational resources, which significantly lowers operational costs. This is especially important for smaller businesses.

Scalability: Optimized models handle increased data and user loads without performance bottlenecks. Think of a retail application smoothly managing peak holiday shopping traffic.

Competitive Advantage: Superior AI capabilities differentiate you from competitors, offering better services and solutions.

Increased Efficiency: AI-driven automation streamlines business processes, freeing up human capital for strategic tasks.

Improved Decision-Making: Accurate AI insights enable better-informed strategic planning and resource allocation.

Enhanced Customer Experience: Personalized interactions boost customer satisfaction and loyalty.

Higher Revenue Generation: Optimizing AI-driven sales and marketing initiatives can translate directly into increased revenue.

According to IBM, fast-growing organizations drive 40% more revenue from personalization than their slower-growing counterparts. A well-optimized AI model is not just a technical asset; it's a strategic tool for business growth.

Next, we will delve into the specific strategies and techniques required to master custom AI model optimization.

Foundational Optimization Techniques

Is your AI model underperforming? Foundational optimization techniques are vital for building custom AI models that deliver real business value. This section covers three core strategies (data preprocessing, model architecture selection, and hyperparameter tuning), starting with data preprocessing.

Data preprocessing is the bedrock of any successful AI model. It ensures that the data you feed your model is clean, relevant, and properly formatted.

- Data Quality: Clean, accurate, and relevant data is crucial. Poor data leads to skewed results.

- Feature Selection: Identify and select the most impactful features. Feature selection improves model efficiency and accuracy by focusing on the most relevant data points.

- Data Transformation: Apply techniques like scaling and encoding. Scaling puts numeric features on comparable ranges so that no feature dominates simply because of its units or magnitude (for example, income in dollars versus age in years), while encoding converts categorical data into the numerical form that machine learning algorithms can process.

- Handling Imbalanced Data: Implement strategies for dealing with uneven class distributions. Addressing imbalance prevents the model from being biased towards the majority class.
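
To make these steps concrete, here is a minimal plain-Python sketch, assuming small in-memory columns of data. It shows one simple filter-style feature selection (dropping near-constant columns), z-score scaling, one-hot encoding, and "balanced" class weights computed as n_samples / (n_classes * class_count), the same heuristic scikit-learn uses for `class_weight="balanced"`. The function names and the toy data are hypothetical; in practice a library such as scikit-learn or pandas would handle these steps.

```python
from collections import Counter


def drop_low_variance(columns, threshold=1e-8):
    """Filter-style feature selection: keep only columns whose variance exceeds threshold."""
    kept = {}
    for name, values in columns.items():
        mean = sum(values) / len(values)
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        if variance > threshold:
            kept[name] = values
    return kept


def standardize(values):
    """Scale a numeric column to zero mean and unit variance (z-scores)."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:
        return [0.0] * n  # a constant column carries no signal
    return [(v - mean) / std for v in values]


def one_hot(categories):
    """Encode a categorical column as binary indicator vectors."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    rows = []
    for c in categories:
        row = [0] * len(vocab)
        row[index[c]] = 1
        rows.append(row)
    return vocab, rows


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}


# Hypothetical toy dataset
features = {"income": [30_000, 45_000, 60_000, 120_000], "country_code": [1, 1, 1, 1]}
region = ["north", "south", "north", "east"]
labels = ["ok", "ok", "ok", "fraud"]

selected = drop_low_variance(features)      # drops the constant "country_code" column
scaled_income = standardize(selected["income"])
vocab, region_encoded = one_hot(region)
weights = balanced_class_weights(labels)    # the rare "fraud" class gets weight 2.0
print(list(selected), scaled_income, vocab, region_encoded, weights)
```

Class weights like these are typically passed to the training loss so that mistakes on the rare class cost more, which counteracts the pull toward the majority class.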

Selecting the right model architecture is equally important. The choice depends on the specific problem you're trying to solve and the nature of your data.

- Algorithm Suitability: Match the AI algorithm to the problem and data. For instance, time series data might benefit from Recurrent Neural Networks (RNNs), while image recognition often uses Convolutional Neural Networks (CNNs) (Image-based time series forecasting: A deep convolutional neural ...).

- Complexity vs. Performance: Balance model complexity with computational efficiency. Overly complex models can lead to overfitting and increased computational costs.

- Transfer Learning: Leverage pre-trained models to accelerate development and improve accuracy. Transfer learning is especially beneficial when you have limited data.

Hyperparameter tuning involves finding good values for the settings that are fixed before training begins, such as the learning rate or the depth of a decision tree. This process significantly impacts model performance.

- Grid Search: Systematically evaluate every combination of candidate values. Grid search is exhaustive but can be computationally expensive, because the number of combinations multiplies with each hyperparameter you add.

- Random Search: Sample combinations at random from the hyperparameter space. Random search is often more efficient than grid search, especially in high-dimensional spaces where only a few hyperparameters strongly affect performance.

- Bayesian Optimization: Search intelligently based on past results. Bayesian optimization builds a probabilistic model of how hyperparameters relate to performance and uses it to choose the next settings to try, which often makes it more sample-efficient than random search.

- Tools and Frameworks for Hyperparameter Tuning: Use libraries like Optuna and Hyperopt to automate the tuning process, saving time and effort.
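
The difference between grid and random search is easiest to see side by side. This sketch assumes a hypothetical `validation_error` function standing in for "train the model and score it on validation data"; both searches get the same budget of 16 evaluations. Libraries like Optuna and Hyperopt wrap this kind of loop for you and add model-based samplers such as TPE, a Bayesian-style approach.

```python
import itertools
import math
import random


def validation_error(learning_rate, l2):
    """Hypothetical stand-in for 'train, then return validation error'.
    A smooth bowl whose minimum sits at learning_rate=0.01, l2=0.1."""
    return (math.log10(learning_rate) + 2) ** 2 + (math.log10(l2) + 1) ** 2


def grid_search(lr_values, l2_values):
    """Evaluate every combination; cost = len(lr_values) * len(l2_values)."""
    best = None
    for lr, l2 in itertools.product(lr_values, l2_values):
        err = validation_error(lr, l2)
        if best is None or err < best[0]:
            best = (err, lr, l2)
    return best


def random_search(n_trials, seed=0):
    """Sample log-uniformly from the same ranges for a fixed budget of trials."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-4, -1)
        l2 = 10 ** rng.uniform(-3, 0)
        err = validation_error(lr, l2)
        if best is None or err < best[0]:
            best = (err, lr, l2)
    return best


grid = grid_search([1e-4, 1e-3, 1e-2, 1e-1], [1e-3, 1e-2, 1e-1, 1.0])  # 16 evaluations
rand = random_search(n_trials=16)                                        # 16 evaluations
print("grid:", grid)
print("random:", rand)
```

In this toy setup grid search only lands on the optimum because the optimum happens to sit on a grid point; random search samples continuous values and does not depend on choosing the grid well.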

Mastering these foundational techniques sets the stage for advanced optimization strategies.

Advanced Optimization Strategies

Is your AI model as lean and efficient as it could be? Advanced optimization strategies can significantly reduce model size and complexity, leading to faster inference and lower operational costs.

Model pruning is a technique that reduces the size and complexity of AI models by removing less important parameters. Think of it as trimming the fat to improve performance.

- Weight Pruning: This removes connections in a neural network that have minimal impact on the model's output. Zeroing out these less significant weights makes the model sparser; turning that sparsity into real speed gains usually requires hardware or software that can skip zero-valued weights.

- Neuron Pruning: This takes it a step further by eliminating entire neurons that contribute little to the model's accuracy. Removing redundant neurons simplifies the model structure.

- Benefits of Pruning: Pruning lowers computational costs and improves inference speed. Smaller, more efficient models are easier to deploy on resource-constrained devices, such as mobile phones or IoT devices.
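
The two pruning styles above can be sketched in a few lines of plain Python, assuming a layer's weights are stored as a list of rows where row `i` holds the incoming weights of output neuron `i`. Magnitude is used as the importance score here, which is one common heuristic rather than the only option; frameworks such as PyTorch ship their own pruning utilities.

```python
def magnitude_prune(weights, sparsity):
    """Weight pruning: zero the fraction `sparsity` of weights with the smallest magnitude."""
    positions = [(r, c) for r in range(len(weights)) for c in range(len(weights[r]))]
    positions.sort(key=lambda rc: abs(weights[rc[0]][rc[1]]))
    k = int(len(positions) * sparsity)  # number of weights to remove
    pruned = [row[:] for row in weights]  # copy so the original layer is untouched
    for r, c in positions[:k]:
        pruned[r][c] = 0.0
    return pruned


def neuron_prune(weights, keep):
    """Neuron pruning: keep the `keep` output neurons (rows) with the largest L2 norm."""
    norms = [(sum(w * w for w in row) ** 0.5, i) for i, row in enumerate(weights)]
    kept = sorted(i for _, i in sorted(norms, reverse=True)[:keep])
    return [weights[i][:] for i in kept], kept


# Hypothetical 3-neuron layer with 3 inputs each
layer = [
    [0.9, -0.05, 0.4],
    [0.01, 0.02, -0.03],
    [-0.7, 0.3, 0.08],
]
print(magnitude_prune(layer, sparsity=0.5))  # 4 of 9 weights set to 0.0
print(neuron_prune(layer, keep=2))           # drops the weak middle neuron
```

Removing a neuron also means dropping the matching input column from the next layer's weight matrix, which this sketch leaves out for brevity.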

Quantization is another powerful optimization technique that focuses on reducing the precision of the model's weights and activations.

- Post-Training Quantization: This converts a trained model's floating-point weights to lower-precision integers. For example, converting 32-bit floating-point numbers to 8-bit integers shrinks weight storage by roughly a factor of four and can speed up computation. This works because lower-precision numbers require less memory to store and can be processed using faster arithmetic operations (Model Compression and Optimization: Techniques to ...).

- Quantization-Aware Training: This involves training models with quantization in mind for better accuracy. By simulating quantization during training, the model learns to be more robust to the effects of reduced precision.

- Trade-offs: There is a balance between precision reduction and potential accuracy loss. It's important to carefully evaluate the impact of quantization on model performance to ensure that accuracy remains acceptable.
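
A minimal sketch of post-training quantization, assuming symmetric per-tensor int8 quantization (one common scheme among several): each weight is divided by a single scale factor, rounded, and stored as an integer in [-127, 127]. Dequantizing afterwards exposes the rounding error, which is at most half the scale for in-range values. The weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float scale for the whole tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.88, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q)  # [42, -127, 0, 88, -50]
print(f"scale={scale:.4f}, max error={max_err:.4f}")
```

The quantize-then-dequantize round trip is also roughly what quantization-aware training simulates in its forward pass, so the model learns weights that survive the rounding.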

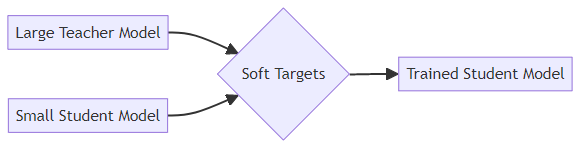

Knowledge distillation involves training a smaller model to mimic the behavior of a larger, more complex model. This allows you to achieve comparable performance with a significantly smaller model.

- Teacher-Student Framework: A smaller "student" model learns to mimic the behavior of a larger "teacher" model. The teacher provides soft targets (full probability distributions over the possible outputs), which contain more information than hard labels because they reveal which incorrect answers the teacher considers plausible. This lets the student learn more effectively.

- Benefits of Distillation: Distillation achieves comparable performance with significantly smaller models. This is particularly useful when deploying lightweight models on resource-constrained devices.

- Use Cases: Knowledge distillation is ideal for deploying efficient models on devices like smartphones, embedded systems, or edge computing platforms.
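
The teacher-student idea can be expressed as a loss function. This sketch follows the classic soft-target formulation (in the style of Hinton et al.'s knowledge distillation): a temperature-softened KL divergence between teacher and student outputs, blended with ordinary cross-entropy on the true label. The logits are hypothetical, and the temperature of 4.0 and the 0.7 blend weight are illustrative assumptions, not recommended values.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label, temperature=4.0, alpha=0.7):
    """alpha * T^2 * KL(teacher || student at temperature T) + (1 - alpha) * cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft_loss = kl * temperature ** 2  # T^2 keeps gradient scale comparable across temperatures
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


teacher = [5.0, 2.0, 0.5]
student = [3.0, 1.0, 0.2]
print(softmax(teacher, 1.0))  # most of the mass on class 0
print(softmax(teacher, 4.0))  # softer: reveals that class 1 ranks above class 2
print(distillation_loss(student, teacher, true_label=0))
```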

These advanced optimization strategies are essential for deploying AI models that are both powerful and efficient. Next, we will explore how to fine-tune your custom AI models for optimal performance.

Fine-Tuning for Specific Applications

Is your AI model not quite hitting the mark? Fine-tuning allows you to adapt a pre-trained model to excel in specific applications. Let's explore how you can tailor your AI for optimal performance.

Supervised Fine-Tuning (SFT) involves training your model using input-output pairs. This teaches the model to produce desired responses for specific inputs.

- It's particularly useful for domain specialization. You can adapt a model for fields like medicine, finance, or law, teaching it to understand industry-specific jargon and nuances. For example, a law firm might fine-tune a general language model on a corpus of legal documents to improve its ability to draft contracts or summarize case law.

- SFT is also effective for task performance. Optimizing a model for sentiment analysis, code generation, or summarization can significantly improve its accuracy. For example, you could fine-tune a model to extract key insights from customer reviews, providing actionable feedback for product development.

- Many projects start with SFT, as it addresses a broad range of fine-tuning scenarios and delivers reliable results with clear input-output data.

Direct Preference Optimization (DPO) trains models to prefer certain responses over others. It learns from comparative feedback: pairs of responses to the same prompt, in which one response is marked as preferred and the other as rejected.

- DPO excels at improving response quality, safety, and alignment with human preferences. For instance, you can use DPO to ensure that a customer service chatbot provides helpful and harmless responses, avoiding potentially offensive or misleading statements. A company might use DPO to train a chatbot to always offer polite and empathetic responses, even when dealing with frustrated customers.

- You can also use DPO to adapt models to a specific style and tone. This is useful for aligning a model's communication style with a brand's voice or adapting it to cultural preferences. For example, a financial institution might use DPO to fine-tune a model to communicate in a clear, concise, and professional manner, building trust with its clients.

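- DPO eliminates the need for a separate reward model. Traditional reinforcement learning from human feedback (RLHF) first trains a reward model on preference data and then optimizes the language model against it; DPO optimizes directly on the preference pairs instead.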
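
The preference-pair idea fits in one formula. Given log-probabilities of the chosen and rejected responses under the model being trained and under a frozen reference copy, DPO minimizes -log sigmoid(beta * (chosen margin - rejected margin)), where each margin is the trained model's log-probability minus the reference model's. The sketch below computes that loss for a single pair; the numbers are hypothetical stand-ins for log-probabilities a real implementation would obtain by running both models, and beta = 0.1 is an assumed setting.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under either
    the policy being trained or the frozen reference model.
    """
    chosen_margin = policy_chosen - ref_chosen
    rejected_margin = policy_rejected - ref_rejected
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))


# Hypothetical log-probabilities: the policy now favors the chosen answer more than the reference does
print(dpo_loss(policy_chosen=-10.0, policy_rejected=-15.0, ref_chosen=-12.0, ref_rejected=-13.0))
```

No reward model appears anywhere: the loss starts at log 2 when the policy matches the reference and falls only as the policy raises the chosen response's probability relative to the rejected one.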

Reinforcement Fine-Tuning (RFT) uses reinforcement learning to optimize models based on reward signals. This allows for more complex optimization objectives.

- RFT is best for complex optimization scenarios where simple input-output pairs aren't sufficient. Consider a scenario where you want to train an AI model to play a complex strategy game. Input-output pairs alone won't suffice; the model needs to learn through trial and error, receiving rewards for making strategic moves and penalties for poor decisions.

- RFT is ideal for objective domains like mathematics, chemistry, and physics where there are clear right and wrong answers. For example, you could use RFT to train a model to solve complex equations or design new molecules with specific properties.

- Keep in mind that RFT requires more machine learning expertise to implement effectively.
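
RFT depends on a reward signal that can be computed automatically. In domains with clear right answers, that can be a simple grader. This sketch assumes hypothetical model outputs for the equation 3x + 5 = 20, scores each with a 1/0 correctness reward, and centers the rewards on their batch mean (one common baseline choice) so that better-than-average answers receive positive weight. The policy-update step itself is left out, since it depends on the training framework.

```python
import re


def extract_final_number(text):
    """Return the last number in the model's output, or None if there is none."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return float(matches[-1]) if matches else None


def math_reward(model_output, expected, tol=1e-6):
    """Verifiable reward: 1.0 if the final answer matches the expected value, else 0.0."""
    answer = extract_final_number(model_output)
    return 1.0 if answer is not None and abs(answer - expected) <= tol else 0.0


def advantages(rewards):
    """Center rewards on the batch mean so better-than-average samples get positive weight."""
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]


# Hypothetical sampled answers to "Solve 3x + 5 = 20"
samples = [
    "3x + 5 = 20, so 3x = 15 and x = 5",
    "x = 15 / 3 = 6",
    "I am not sure.",
]
rewards = [math_reward(s, expected=5) for s in samples]
print(rewards)  # [1.0, 0.0, 0.0]
print(advantages(rewards))
```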

With these fine-tuning techniques in your toolkit, you're well-equipped to tailor your custom AI models to meet your specific business needs. Next, let's look at real-world examples of what optimized AI models can achieve.

Real-World Examples and Case Studies

Can optimized AI models truly transform a business, or are they just hype? Let's explore some real-world examples that highlight the transformative power of optimized AI.

Many organizations struggle with customer service AI that responds slowly or gives inaccurate answers. Model pruning and knowledge distillation can reduce model size and improve inference speed, so customer inquiries get resolved faster and the chatbot can handle more conversations on the same hardware, leading to happier customers.

High computational costs and long training times can hinder data analysis AI. Feature selection reduces the number of inputs the model must process, which shortens training time, while hyperparameter tuning improves prediction accuracy. A more efficient data analysis model allows businesses to gain insights more quickly, leading to better decision-making.

Compile7 specializes in developing custom AI agents that automate tasks, enhance productivity, and transform how your business operates. Offering custom-built AI solutions, Compile7 empowers businesses to create personalized content at scale, driving engagement and conversions. Leverage Compile7's AI-powered content creation agents to tailor messaging, product descriptions, and marketing materials to individual customer preferences. Contact Compile7 today to discover how our AI agents can revolutionize your content strategy and deliver unparalleled personalization.

These examples demonstrate how optimizing custom AI models can lead to tangible improvements in various business functions. Next, we will look at how to monitor and continuously improve your optimized AI models.

Monitoring and Continuous Improvement

Is your AI model getting better over time, or is it slowly losing its edge? Monitoring and continuous improvement are essential to keep your custom AI models performing at their best.

Effective monitoring means keeping a close eye on several key performance indicators. These metrics provide insights into your model's health and help you identify areas for improvement.

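- Accuracy: This measures how often your AI model makes correct predictions. For example, in a medical diagnosis AI, accuracy reflects how often the model correctly identifies diseases. When one class is rare, as many diseases are, track precision and recall as well, because a model that always predicts "healthy" can still score high accuracy.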

- Latency: Latency refers to the time it takes for your model to generate a response. High latency can frustrate users, so it's crucial to identify and resolve bottlenecks.

- Resource Utilization: Track CPU, memory, and GPU usage to ensure your model is efficient. Overuse of resources can lead to higher operational costs and performance issues.

- Tools: Prometheus collects and stores these metrics and can trigger alerts when they cross thresholds, while Grafana turns them into dashboards, so you can monitor performance in real time.

AI models are not static; they need to adapt to changes in the data they process. Retraining keeps your model accurate and relevant over time.
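
A minimal sketch of a health check over a window of logged requests, assuming you already have predictions, ground-truth labels (which often arrive with a delay in production), and per-request latencies. The thresholds (90% accuracy, 300 ms at the 95th percentile) and the request data are hypothetical; in a real deployment you would export these values as metrics and let your monitoring stack evaluate the alert rules.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def check_health(predictions, labels, latencies_ms, min_accuracy=0.90, max_p95_ms=300):
    """Summarize a window of requests and list any threshold breaches."""
    accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
    p95 = percentile(latencies_ms, 95)
    alerts = []
    if accuracy < min_accuracy:
        alerts.append(f"accuracy {accuracy:.2f} is below {min_accuracy:.2f}")
    if p95 > max_p95_ms:
        alerts.append(f"p95 latency {p95} ms exceeds {max_p95_ms} ms")
    return {"accuracy": accuracy, "p95_ms": p95, "alerts": alerts}


# Hypothetical window of 10 logged requests
preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
truth = [1, 0, 1, 0, 0, 1, 0, 1, 1, 1]
latency = [120, 95, 110, 480, 130, 105, 98, 115, 125, 101]

report = check_health(preds, truth, latency)
print(report)  # accuracy 0.8, p95 480 ms, two alerts
```

Tail percentiles such as p95 are used instead of the average because a handful of very slow requests, like the 480 ms one here, is what users notice, and an average would hide it.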

- Data Drift: This occurs when the distribution of input data changes. For instance, a fraud detection model might see new patterns of fraudulent activity that it wasn't trained on.

- Concept Drift: Concept drift happens when the relationship between input and output variables shifts. For example, customer preferences in a recommendation system might evolve over time.

- Retraining Strategies: Implement automated retraining pipelines to maintain model accuracy. An automated retraining pipeline typically involves setting up a system that periodically checks for performance degradation or data drift, triggers a retraining process using updated data, and then deploys the newly trained model. This often includes data validation, model training, and evaluation steps. Regular retraining with updated data helps your model adapt to new patterns and trends.
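
Data drift can be detected by comparing a feature's distribution at training time with its distribution in live traffic. This sketch uses the Population Stability Index (PSI), one common drift statistic, over equal-width bins; the 0.2 retraining threshold reflects a frequently quoted rule of thumb and should be treated as an assumption, and the feature data is simulated. PSI only looks at inputs, so catching concept drift also requires monitoring accuracy against fresh labels.

```python
import math
import random


def psi(reference, live, bins=10):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # floor empty bins at a tiny proportion so log() stays defined
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = proportions(reference), proportions(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def should_retrain(reference, live, threshold=0.2):
    """Hypothetical trigger: flag retraining when PSI exceeds the threshold."""
    return psi(reference, live) > threshold


rng = random.Random(42)
training_feature = [rng.gauss(0, 1) for _ in range(5000)]
stable_traffic = [rng.gauss(0, 1) for _ in range(5000)]
shifted_traffic = [rng.gauss(1, 1) for _ in range(5000)]  # mean moved by one standard deviation

print(round(psi(training_feature, stable_traffic), 4), should_retrain(training_feature, stable_traffic))
print(round(psi(training_feature, shifted_traffic), 4), should_retrain(training_feature, shifted_traffic))
```

In an automated pipeline, a check like `should_retrain` would run on a schedule and, when it fires, kick off the validation, training, and evaluation steps described above.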

By continuously monitoring and retraining your AI models, you can ensure they deliver sustained value to your business. Now, let's explore what the future of AI model optimization holds for businesses.

The Future of AI Model Optimization

The future of AI model optimization is rapidly evolving, presenting both exciting opportunities and complex challenges for business leaders. As AI continues to permeate various industries, staying ahead of emerging techniques and addressing ethical considerations will be crucial for sustained success.

Several innovative techniques are poised to reshape ai model optimization:

- Automated Machine Learning (AutoML) simplifies optimization. It automates feature engineering, model selection, and hyperparameter tuning. This allows businesses to deploy high-performing AI models more quickly and efficiently.

- Neural Architecture Search (NAS) automatically searches for well-performing neural network architectures. NAS tailors the architecture to specific tasks, which can result in more efficient and accurate models.

- TinyML enables AI on ultra-low-power devices such as microcontrollers. TinyML brings AI to edge applications, opening new possibilities for real-time data processing and decision-making in remote or resource-constrained environments.

The advancements in these powerful optimization techniques make it even more critical to consider the broader implications of their development and deployment. As AI becomes more integrated into business processes, ethical considerations are paramount:

- Bias Mitigation ensures fairness and prevents discrimination in AI models. Businesses must proactively identify and correct biases in training data and algorithms to avoid perpetuating societal inequalities. Common methods include using fairness metrics to detect bias and applying debiasing techniques during training or post-processing.

- Transparency and Explainability make AI decisions understandable and accountable. Explainable AI helps build trust by allowing users and stakeholders to understand why a model made a particular decision. It also facilitates compliance with regulatory requirements, such as those found in the GDPR or industry-specific standards that mandate a degree of transparency in automated decision-making.

- Data Privacy protects user data. Businesses must comply with privacy regulations like the GDPR and CCPA. They also need robust safeguards for sensitive information, such as strong security controls and privacy-preserving techniques like data anonymization or differential privacy.

- Security protects your AI model from malicious use. AI models can be compromised through adversarial attacks (subtly altering input data to cause misclassification) or data poisoning (contaminating training data). Businesses should defend against these threats with measures such as input validation and robust model deployment practices.
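
Two of the safeguards above, detecting bias and protecting privacy, can be illustrated with a short sketch. The first function computes the demographic parity difference, one of several common fairness metrics, as the gap in positive-prediction rates between groups. The second releases a count under differential privacy with the Laplace mechanism, drawing Laplace noise as the difference of two exponential samples. The loan-approval data and the epsilon value are hypothetical.

```python
import random


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest positive-prediction rate across groups (0 = parity)."""
    rates = {}
    for g in set(groups):
        group_preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates[g] = sum(group_preds) / len(group_preds)
    return max(rates.values()) - min(rates.values()), rates


def laplace_count(true_count, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query changes by at most 1 when one person is added or removed
    (sensitivity 1), so the noise scale is 1 / epsilon. The difference of two
    independent exponential draws with mean 1 / epsilon is Laplace-distributed
    with exactly that scale.
    """
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Hypothetical loan approvals (1 = approved) for two applicant groups
approved = [1, 1, 0, 1, 0, 0, 1, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(approved, group)
print(gap, rates)  # group "a" is approved at 0.75, group "b" at 0.25

rng = random.Random(7)
print(laplace_count(true_count=120, epsilon=1.0, rng=rng))
```

A smaller epsilon adds more noise and gives stronger privacy; choosing it is a policy decision as much as a technical one.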

Failing to address these ethical considerations can lead to legal, reputational, and financial risks.

In conclusion, the future of AI model optimization involves embracing emerging techniques while prioritizing responsible and ethical practices. By staying informed and proactively addressing these challenges, businesses can leverage AI to drive innovation and create value in a way that benefits both their organization and society as a whole.